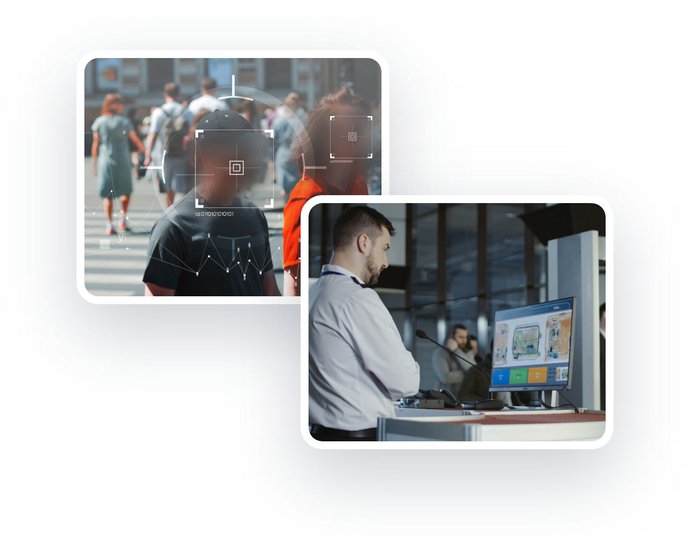

Daisy is a universal identification AI. It can be applied to anything: images, sounds, chemical spectra, even molecular data. It is fast, accurate and designed to be energy efficient. Daisy's classification models are built ethically, and no labour-intensive pre-processing is required.

Get in touch